The ABC’s of Splunk Part Five: Splunk CheatSheet

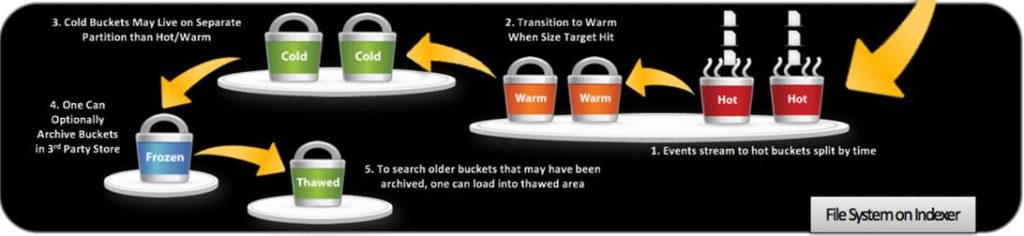

In the past few blogs, I wrote about choosing an environment (clustered or standalone), configuring Splunk on Linux, managing storage over time, and using the deployment server.

If you haven’t read our previous blogs, get caught up here! Part 1, Part 2, Part 3, Part 4

For this blog, I decided to switch things up and give you a CheatSheet (takes me back to high school) of the commands and settings you will need during your installation, which are sometimes hard to find.

This blog is split into two sections: a Splunk CheatSheet and a Linux CheatSheet.

Splunk CheatSheet:

1: Management Commands

$SPLUNK_HOME/bin/splunk status – To check Splunk status

$SPLUNK_HOME/bin/splunk start – To start the Splunk processes

$SPLUNK_HOME/bin/splunk stop – To stop the Splunk processes

$SPLUNK_HOME/bin/splunk restart – To restart the Splunk processes
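If you run these often, a small wrapper saves typing the full path every time. This is a minimal sketch for Bash, assuming Splunk lives in `SPLUNK_HOME` and falling back to `/opt/splunk` (the default Linux install location) when that variable is not set; `splunk_ctl` is a hypothetical helper name, not part of Splunk.

```shell
# Assumption: fall back to the default Linux install path if SPLUNK_HOME is unset.
SPLUNK_HOME="${SPLUNK_HOME:-/opt/splunk}"

# splunk_ctl ACTION: hypothetical helper that runs one of the four management commands.
splunk_ctl() {
  local action="$1"
  case "$action" in
    status|start|stop|restart) ;;
    *)
      echo "usage: splunk_ctl {status|start|stop|restart}" >&2
      return 2
      ;;
  esac
  # Quote the path so installs under directories containing spaces still work.
  "$SPLUNK_HOME/bin/splunk" "$action"
}
```

Usage: `splunk_ctl status`. Run it as the account that owns the Splunk installation so file permissions stay consistent.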

2: How to Check Licensing Usage

Go to “Settings” > “Licensing”.

For a more detailed report, go to “Settings” > “Monitoring Console” > “Indexing” > “License Usage”.

3: How to Delete Index Data

You’re done configuring your installation, but you have lots of logs going into an old indexer, or data you no longer need that is taking up space.

Clean index data (note: you cannot recover these logs once you issue the command). Splunk must be stopped before you run it:

$SPLUNK_HOME/bin/splunk clean eventdata -index <index_name>

If you do not provide the -index argument, the command clears all of your indexes.

Do not run this command directly in a clustered environment.
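Because leaving out `-index` wipes every index, and `splunk clean` will not run while splunkd is up, it can help to wrap the whole sequence in a guard. This is a minimal sketch for a standalone instance only, assuming Bash and the same `SPLUNK_HOME` fallback as above; `clean_index` and the index name in the usage line are hypothetical. The Splunk CLI normally still prompts for confirmation before deleting anything.

```shell
SPLUNK_HOME="${SPLUNK_HOME:-/opt/splunk}"

# clean_index INDEX: hypothetical helper that permanently deletes the events in one index.
clean_index() {
  local index="$1"
  # Refuse to run without a name: "clean eventdata" with no -index clears ALL indexes.
  if [ -z "$index" ]; then
    echo "usage: clean_index <index_name>" >&2
    return 2
  fi
  # splunk clean only works while Splunk is stopped.
  "$SPLUNK_HOME/bin/splunk" stop || return 1
  # Deleted events cannot be recovered.
  "$SPLUNK_HOME/bin/splunk" clean eventdata -index "$index" || return 1
  "$SPLUNK_HOME/bin/splunk" start
}
```

Usage: `clean_index old_firewall_logs`. The empty-argument check is the important part: it turns the "clears all indexes" mistake into a usage error.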

4: Changing your TimeZone (Per User)

Click your username in the top navigation bar, select “Preferences”, and choose your time zone.

5: Search Commands That Are Nice To Know For Beginners

index="name of the index you’re searching", e.g. index="pan_log" for Palo Alto firewalls

sourcetype="name of the sourcetype you’re looking for", e.g. sourcetype="pan:traffic" (other Palo Alto sourcetypes include pan:userid, pan:threat, and pan:system)

| dedup <field>: removes events that repeat a value already seen in that field, keeping only the first one. For instance, if you dedup on user and your firewall logs all user activity, you will not see all of each user’s activity, just one event per distinct user.

| stats: Calculates aggregate statistics, such as average, count, and sum, over the result set

| stats count by rule: Shows the number of events that match each rule on your firewall
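If the difference between `dedup` and `stats count by` still feels fuzzy, both ideas can be reproduced with `awk` on a small CSV. This is only an analogy for illustration, not how Splunk evaluates a search; the column layout (`time,user,rule`) and the sample rows are hypothetical.

```shell
# Hypothetical firewall export with columns time,user,rule (no header row).
sample=$(mktemp)
cat > "$sample" <<'EOF'
10:00,alice,allow-web
10:01,bob,allow-web
10:02,alice,allow-dns
10:03,carol,block-p2p
10:04,bob,allow-web
EOF

# Rough equivalent of "| dedup user": keep the first row seen for each distinct user.
dedup_user() { awk -F, '!seen[$2]++' "$1"; }

# Rough equivalent of "| stats count by rule": one line per rule with its row count.
count_by_rule() { awk -F, '{ n[$3]++ } END { for (r in n) print r, n[r] }' "$1" | sort; }

dedup_user "$sample"     # rows 10:00 (alice), 10:01 (bob), 10:03 (carol)
count_by_rule "$sample"  # allow-dns 1, allow-web 3, block-p2p 1
```

Note how `dedup` throws rows away (alice’s second event disappears), while `stats count by` collapses every row into a per-rule total.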

How to get the actual event ingestion time

As most of you may know, the _time field in Splunk events is not always the ingestion time; it is usually the timestamp Splunk extracted from the event itself. So how do you get the time an event was actually ingested? Use the _indextime field, which records when the event was indexed:

| eval it=strftime(_indextime, "%F %T") | table it, _time, other_fields
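Both _time and _indextime are Unix epoch seconds, and `%F %T` lays them out as `YYYY-MM-DD HH:MM:SS`. The sketch below reproduces that formatting with GNU `date` and computes the indexing lag for a pair of hypothetical epoch values. It is a shell illustration only (shown in UTC for repeatability, whereas Splunk formats in your user time zone); `fmt_epoch` and `index_lag` are hypothetical helpers.

```shell
# fmt_epoch EPOCH: same layout as strftime(EPOCH, "%F %T"); needs GNU date, shown in UTC.
fmt_epoch() { TZ=UTC date -d "@$1" '+%F %T'; }

# index_lag TIME INDEXTIME: whole seconds between the event timestamp and indexing.
index_lag() { echo $(( $2 - $1 )); }

event_time=1700000000   # hypothetical _time value
index_time=1700000095   # hypothetical _indextime value
echo "_time:      $(fmt_epoch "$event_time")"                 # 2023-11-14 22:13:20
echo "_indextime: $(fmt_epoch "$index_time")"                 # 2023-11-14 22:14:55
echo "lag:        $(index_lag "$event_time" "$index_time")s"  # 95s
```

Inside Splunk, the same lag is simply `| eval lag=_indextime-_time`, which is a quick way to spot sources that arrive late or carry wrong timestamps.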

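Find where the data arriving at a receiving port is coming from

index=_internal source=*metrics.log (tcpin_connections OR udpin_connections)

Note that OR must be written in uppercase; a lowercase “or” is treated as an ordinary search term.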
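If you are logged in to the indexer itself, roughly the same check can be made from the shell by reading metrics.log directly; it lives under `$SPLUNK_HOME/var/log/splunk/`. This is a minimal sketch that only filters on the two group names used in the search above and assumes nothing about the other fields on each line; `input_connections` is a hypothetical helper.

```shell
SPLUNK_HOME="${SPLUNK_HOME:-/opt/splunk}"

# input_connections [LOGFILE]: print metrics.log lines for TCP/UDP input connections.
input_connections() {
  local log="${1:-$SPLUNK_HOME/var/log/splunk/metrics.log}"
  grep -E 'group=(tcpin_connections|udpin_connections)' "$log"
}
```

Usage: `input_connections | tail -n 20` shows the most recent connection entries. The search in Splunk Web is still the better option for anything beyond a quick look, since it covers rotated logs and other indexers.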

Linux CheatSheet:

User Operations

whoami – Which user is active. Useful to verify you are using the correct user to make configuration changes in the backend.

chown -R <user>:<group> <directory> – Change the owner of a directory and everything inside it.
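Before editing files under `$SPLUNK_HOME`, it is worth confirming you are the account that runs Splunk, and fixing ownership if files were created as root. This is a minimal sketch, assuming the service account is named `splunk` (a common convention, not something Splunk enforces); `require_user` is a hypothetical helper.

```shell
# require_user NAME: fail unless the current user (as reported by whoami) is NAME.
require_user() {
  if [ "$(whoami)" != "$1" ]; then
    echo "You are $(whoami); switch to $1 first (for example: sudo -iu $1)." >&2
    return 1
  fi
}

# Typical use before touching configuration (assumes a "splunk" service account):
#   require_user splunk && vi "$SPLUNK_HOME/etc/system/local/inputs.conf"
# Restoring ownership after working as root (run as root; path assumed):
#   chown -R splunk:splunk /opt/splunk
```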

Directory Operations

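mv <source> <destination> – Move a file or directory to a new location.

mv <old_name> <new_name> – Rename a file or directory.

cp <file> <destination> – Copy a file to a new location.

cp -r <directory> <destination> – Copy a directory to a new location.

rm -rf <path> – Remove a file or directory (recursively and without prompting, so double-check the path).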
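A common way to combine these commands is to back up a configuration directory before editing it, so a bad change can be rolled back with `mv`. This is a minimal sketch; `backup_dir` is a hypothetical helper and the `.bak.<timestamp>` suffix is an arbitrary choice.

```shell
# backup_dir DIR: copy DIR recursively to DIR.bak.<timestamp> and print the copy's path.
backup_dir() {
  local src="${1%/}"   # drop a trailing slash so the suffix attaches to the name
  local dest
  dest="$src.bak.$(date +%Y%m%d%H%M%S)"
  if [ ! -d "$src" ]; then
    echo "not a directory: $src" >&2
    return 1
  fi
  if [ -e "$dest" ]; then
    echo "backup already exists: $dest" >&2
    return 1
  fi
  # -r copies the whole tree; -p keeps permissions and timestamps.
  cp -rp "$src" "$dest" && echo "$dest"
}

# Example (illustrative path):
#   backup=$(backup_dir "$SPLUNK_HOME/etc/system/local")
# Roll back by swapping the copy back in:
#   rm -rf "$SPLUNK_HOME/etc/system/local" && mv "$backup" "$SPLUNK_HOME/etc/system/local"
```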

Get Size

df -h – Get disk usage (in human-readable size unit)

du -sh * – Get the size of every file and directory under the current directory.

watch df -h – Monitor disk usage (in human-readable size unit). Update stats every two seconds. Press Ctrl+C to exit.

watch du -sh * – Monitor the size of every file and directory under the current directory. Update stats every two seconds. Press Ctrl+C to exit.
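Splunk pauses indexing when free disk space runs too low, so a scripted check of the partition holding your indexes is handy in cron or before a large ingest. This is a minimal sketch built on POSIX `df -P` output; the 90% default threshold is an arbitrary choice, and `disk_used_pct` and `check_disk` are hypothetical helpers.

```shell
# disk_used_pct PATH: integer percentage used on the filesystem that holds PATH.
disk_used_pct() {
  # -P forces one line per filesystem, so the Use% column is always field 5.
  df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# check_disk PATH [THRESHOLD]: warn and return 1 when usage reaches THRESHOLD (default 90).
check_disk() {
  local path="$1" limit="${2:-90}" used
  used=$(disk_used_pct "$path")
  # Empty output means df could not read PATH.
  [ -n "$used" ] || return 2
  if [ "$used" -ge "$limit" ]; then
    echo "WARNING: $path is ${used}% full (limit ${limit}%)" >&2
    return 1
  fi
  echo "$path is ${used}% full"
}
```

Usage: `check_disk /opt/splunk/var/lib/splunk 85`, pointing at wherever your indexes actually live.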

Processes

ps aux – List all the running processes.

top – Show live resource usage (CPU, memory) for each process. Press q to exit.

Work with Files

vi <file> – Open and edit the file with the vi editor

tail -f <file> – Follow a log file. Unlike cat or vi, it keeps displaying new lines as they are written to the file. Press Ctrl+C to exit.
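For Splunk troubleshooting, the file you will tail most often is `$SPLUNK_HOME/var/log/splunk/splunkd.log`, and piping `tail` into `grep` keeps only the lines you care about. This is a minimal sketch; matching ERROR and WARN as whole words assumes the log level appears as a separate word on each line, which is typical of splunkd.log but worth confirming on your version. `recent_problems` and `follow_problems` are hypothetical helpers.

```shell
SPLUNK_HOME="${SPLUNK_HOME:-/opt/splunk}"
SPLUNKD_LOG="$SPLUNK_HOME/var/log/splunk/splunkd.log"

# recent_problems [LOGFILE] [LINES]: ERROR/WARN lines among the last LINES lines (default 1000).
recent_problems() {
  tail -n "${2:-1000}" "${1:-$SPLUNKD_LOG}" | grep -wE 'ERROR|WARN'
}

# follow_problems [LOGFILE]: live view of new ERROR/WARN lines; press Ctrl+C to exit.
follow_problems() {
  # --line-buffered makes grep print each match immediately instead of in blocks.
  tail -f "${1:-$SPLUNKD_LOG}" | grep --line-buffered -wE 'ERROR|WARN'
}
```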

Networking

ifconfig – To get the IP address of the machine (on newer distributions where ifconfig is not installed, use ip addr instead)

Written by Usama Houlila.

Any questions, comments, or feedback are appreciated! Leave a comment or email me at uhoulila@newtheme.jlizardo.com with any questions you might have.