Case Study #1: Rural Hospitals and New Technologies: Leading the Way in Business Continuity

The purpose of this series is to shed light on the evolving nature of Business Continuity across all industries. If your plan is outdated, its likelihood of success in a real scenario is diminished. Many of our clients already have a plan in place, but once we start testing, we often have to make changes or redesign the solution altogether. Sometimes the Business Continuity plan is sound but does not account for recent changes, such as new applications or new business lines and offices.

In each scenario, the customer’s name will not be shared. However, their business and technical challenges as they relate to Business Continuity will be discussed in detail.

Introduction

This case study concerns a rural hospital in the Midwest United States. Rural hospitals face many challenges, chiefly that they serve poorer communities with fewer reimbursements and lower occupancy rates than their metropolitan competition. Despite this, the hospital surmounted these difficulties and built an infrastructure that is as modern and leading-edge as those of most major hospital systems.

Background

Our client needed to test their existing Disaster Recovery plan and develop a more comprehensive Business Continuity plan to ensure compliance and seamless healthcare delivery in case of an emergency. This particular client has one main hospital and a network of nine clinics and doctors’ offices.

The primary items of concern were:

- Connectivity: How are the hospital and clinics interconnected, and what risks can lead to a short or long-term disruption?

- Medical Services: Which of their current systems are crucial for them to continue to function? Are those systems part of the current disaster recovery plan, and have they been tested?

- Telecommunication Services: Phone system and patient scheduling.

- Compliance: If the Disaster Recovery system becomes active, especially for an extended period, the cybersecurity risk will increase as more healthcare practitioners use the backup system. That use exposes the backup system to threats that may already exist in the wild but have never affected the live system.

After a few days of auditing, discussion, and discovery, these were the results:

Connectivity: The entire hospital and all clinics were on a single fiber network. Although other providers offered Internet access, local fiber was available from only one provider in the area.

Disaster Recovery Site: Their current Business Continuity solution had one of the clinics as a disaster recovery site. This would be disastrous in the event of a fiber network failure, as all locations would go down simultaneously.
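The shared-fiber risk described above can be checked mechanically once each site's dependencies are written down. The following is a minimal sketch, not the audit method used in this engagement; the site names and dependency labels are hypothetical placeholders.

```python
# Hypothetical dependency map: each site lists the infrastructure it relies on.
# The names below are illustrative and do not come from the client's environment.
SITE_DEPENDENCIES = {
    "main_hospital": {"fiber_provider_a", "utility_power_main"},
    "dr_clinic": {"fiber_provider_a", "utility_power_clinic"},
}


def shared_dependencies(primary, recovery, deps=SITE_DEPENDENCIES):
    """Return the dependencies whose failure would take down both sites."""
    return deps[primary] & deps[recovery]


common = shared_dependencies("main_hospital", "dr_clinic")
if common:
    print("Recovery site shares single points of failure:", sorted(common))
```

Any non-empty result means the recovery site does not protect against a failure of those shared components, which is the situation the fiber finding describes.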

Partner Tunnels: Many of their clinical functions required access to partner networks, which is provided through VPN tunnels. These tunnels were not provisioned in their current solution.

Medical Services: The primary EMR system was of great concern because their provider would say: “Yes, we are replicating the data and it’s 100% safe, but we cannot test it with you – because, if we do, we have to take the primary system down for a while.” When we hear this, we usually expect serious trouble. So we brought management into the discussion and pushed the vendor to run a test. The test failed. Yes, the data was replicated and the system could be restored, but no one could access it. The primary reason was that the system replicates and publishes successfully only if the redundant system is on the same network as the primary (an insane and, sadly, common scenario). A solution to this problem would be to create an “Extended LAN” between the primary site and the backup site.
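A same-network requirement like this one can be screened for before a failover test. The sketch below uses Python's standard `ipaddress` module to check whether a replica's address falls inside the primary's subnet. The addresses and prefix length are hypothetical, and subnet membership is only an approximation: a vendor may actually require the same layer 2 broadcast domain, which this check cannot confirm.

```python
import ipaddress


def same_subnet(primary_ip, replica_ip, prefix_len):
    """Return True if replica_ip lies inside the subnet containing primary_ip."""
    # strict=False lets us pass a host address and get its enclosing network.
    network = ipaddress.ip_network(f"{primary_ip}/{prefix_len}", strict=False)
    return ipaddress.ip_address(replica_ip) in network


# Hypothetical addresses, for illustration only.
on_extended_lan = same_subnet("10.10.20.5", "10.10.20.77", 24)
on_routed_site = same_subnet("10.10.20.5", "10.20.20.77", 24)
```

Under these assumed addresses, the first replica would satisfy a same-subnet requirement and the second would not, which is the gap an extended LAN is meant to close.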

Telecommunication: The telecommunication system was not a brand known to us, and the manufacturer informed us that the redundancy built into the system works only if both the primary and secondary are connected to the same switch infrastructure.

Solution Proposed

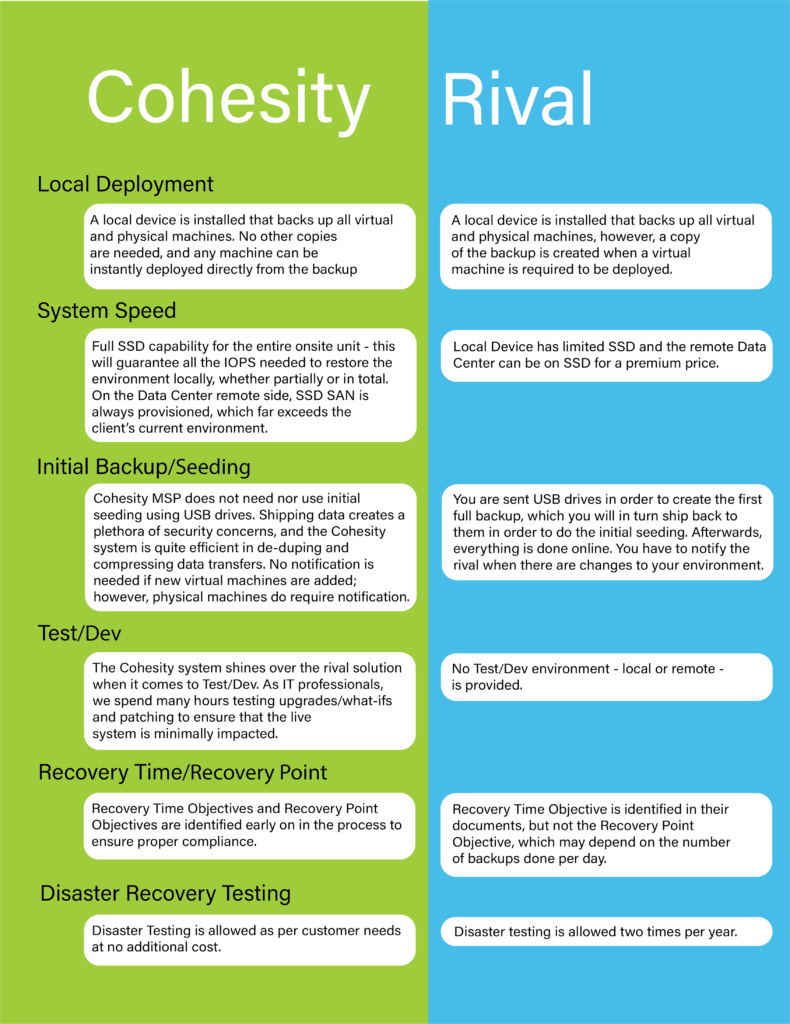

CrossRealms proposed a hot site solution in which three copies of the data and virtual machines will exist: one on their production systems, one on their local network in the form of a Cohesity Virtual Appliance, and one at our Chicago/Vegas Data Centers. This solution allows for instantaneous recovery using the second copy if their local storage or virtual machines are affected. Cohesity’s Virtual Appliance software can publish the environment instantaneously, without having to restore the data to the production system.

The third copy will be used in the case of a major fiber outage or power failure, where their systems will become operational at either of our data centers. The firewall policies and VPN tunnels are preconfigured, and a read-only copy of their Active Directory environment is maintained there, which will provide up-to-the-minute replication of their authentication and authorization services.
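The choice between the three copies can be summarized as a small decision function. This is an illustrative sketch of the logic described above, not part of the deployed solution; the failure categories and return labels are names invented for the example.

```python
from enum import Enum, auto


class Failure(Enum):
    """Hypothetical failure categories drawn from the scenarios above."""
    NONE = auto()
    LOCAL_STORAGE = auto()
    VIRTUAL_MACHINES = auto()
    FIBER_OUTAGE = auto()
    POWER_FAILURE = auto()


def choose_copy(failure):
    """Pick which of the three copies should serve the environment."""
    if failure is Failure.NONE:
        return "production"
    if failure in (Failure.LOCAL_STORAGE, Failure.VIRTUAL_MACHINES):
        # Second copy: published from the local virtual appliance.
        return "local_appliance"
    # Fiber outage or power failure: fail over to a remote data center.
    return "remote_data_center"


print(choose_copy(Failure.FIBER_OUTAGE))
```

Writing the mapping down this explicitly also makes it straightforward to turn each branch into a run book entry and a test scenario.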

The following are items still in progress:

- LAN Extension for their EMR: We have created a LAN Extension to one of their clinics, which will help in case of a hardware or power/cooling failure at their primary facility. However, the vendor has very specific hardware requirements, which will force the hospital either to purchase and colocate more hardware at our data center or to migrate their secondary equipment there instead.

- Telecom Service: They currently have ISDN backup for the system, which will work even in the case of a fiber outage. Because ISDN technology will be phased out in the next three years, an alternative needs to be configured and tested. For now, there is no redundancy in case of a primary site failure, a risk whose mitigation may have to be pushed to next year’s budget.

Lessons Learned

The following are our most important lessons learned through working with this client:

- Bringing management on board to push and prod vendors to work with the Business Continuity Team is important. We spent months attempting to coordinate testing the EMR system with the vendor, and only when management got involved did that happen.

- Testing the different scenarios based on the tabletop exercises exposed issues that we didn’t anticipate, such as the fact that their primary storage was solid state. This meant the backup solution had to deliver the same level of IOPS, whether local to them or at our data centers.

- Run books and continuous practice runs are vital, as they are the only guarantee of an orderly, professional, and expedient restoration in a real disaster.